By Dr Sheena Visram, Prof. Dean Mohamedally and Dr Atia Rafiq, MotionInput Games

MotionInput Games presents MotionInput, a new frontier in touchless gaming technology. It uses gesture-based interaction to let users control existing and new games with the movements they are able to make: body movements, facial expressions, speech and hand gestures.

Understanding MotionInput

MotionInput Games is a spinout company of University College London’s Department of Computer Science. Its MotionInput Engine uses body movements, speech and facial expressions to control gameplay, offering an innovative alternative to standard controllers in existing PC and mobile games. By interpreting gestures captured through a webcam or a built-in front-facing camera, the technology lets people play their existing game libraries with simple motions. This makes play more engaging, because players interact physically with their characters and game worlds, and more inclusive for those who find traditional controllers difficult to use. The MotionInput Engine APIs form a large catalogue of movement groupings for different types of user interaction. They have been modelled on a wide range of users’ motions and combinations of motions, particularly those of users with accessibility needs. They are part of a broader effort to create touchless interactions that are accessible and adaptable, helping people of all abilities participate fully.

How MotionInput enhances accessibility

The goal of MotionInput Games is to make gaming and digital interaction more natural, more engaging through movement and more inclusive of the different ways people move. Conventional gaming setups can exclude people who face challenges using standard controllers because of physical, sensory or cognitive disabilities. MotionInput addresses these barriers by letting players control games through many combinations of gestures, such as nodding, waving, pointing, turning or even facial expressions. Consider, for example, a player whose head turning is limited, whose hands cannot stay steady and show jitter, or who cannot open their fingers. The engine includes model categories for each of these variations, so that each one can be assessed against the nuances of an individual user’s needs. This opens up gaming to a broader audience and lets everyone enjoy the social, creative, expressive and educational aspects of play in ways that suit their abilities.
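Hand jitter of the kind described above is commonly handled in software by smoothing the tracked position before it drives a cursor or game control. The sketch below shows one such generic technique: an exponential moving average combined with a small dead zone. It is not MotionInput’s own implementation. The class name `JitterFilter` and its `alpha` and `dead_zone` parameters are hypothetical, and the input is assumed to be normalised (x, y) coordinates produced by some hand-tracking model.

```python
import math
from dataclasses import dataclass, field
from typing import Optional, Tuple

Point = Tuple[float, float]


@dataclass
class JitterFilter:
    """Hypothetical smoother for a shaky pointer (illustrative, not MotionInput's API).

    alpha: weight given to the newest sample (0 < alpha <= 1); lower values smooth more.
    dead_zone: movements shorter than this distance (normalised units) are ignored.
    """
    alpha: float = 0.3
    dead_zone: float = 0.01
    _state: Optional[Point] = field(default=None, init=False, repr=False)

    def update(self, raw: Point) -> Point:
        if self._state is None:
            # First sample: nothing to smooth against yet.
            self._state = raw
            return raw
        sx, sy = self._state
        rx, ry = raw
        # Ignore small tremors that stay inside the dead zone.
        if math.hypot(rx - sx, ry - sy) < self.dead_zone:
            return self._state
        # Exponential moving average: move part of the way towards the new sample.
        self._state = (sx + self.alpha * (rx - sx), sy + self.alpha * (ry - sy))
        return self._state


if __name__ == "__main__":
    f = JitterFilter(alpha=0.5, dead_zone=0.01)
    print(f.update((0.5, 0.5)))      # first sample passes straight through
    print(f.update((0.505, 0.495)))  # tiny tremor inside the dead zone is ignored
    print(f.update((0.75, 0.5)))     # deliberate move is followed, but smoothed
```

A lower `alpha` gives steadier control at the cost of some lag. A larger `dead_zone` suits stronger tremors but makes fine movements harder. Those trade-offs would need tuning per user.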

Key Developers and Advocates

At the forefront of this movement is a team at University College London (UCL)’s Department of Computer Science, led by Professor Dean Mohamedally, who developed the MotionInput Engine software with over 300 academics and student developers from UCL. The software has been in development since the COVID-19 pandemic in 2020. It uses a layered approach that combines several state-of-the-art AI and computer vision models from Meta, Google and many others. The architecture is designed as a foundational library and API that integrates with other software, and it puts the categorisation of human motion capabilities first.

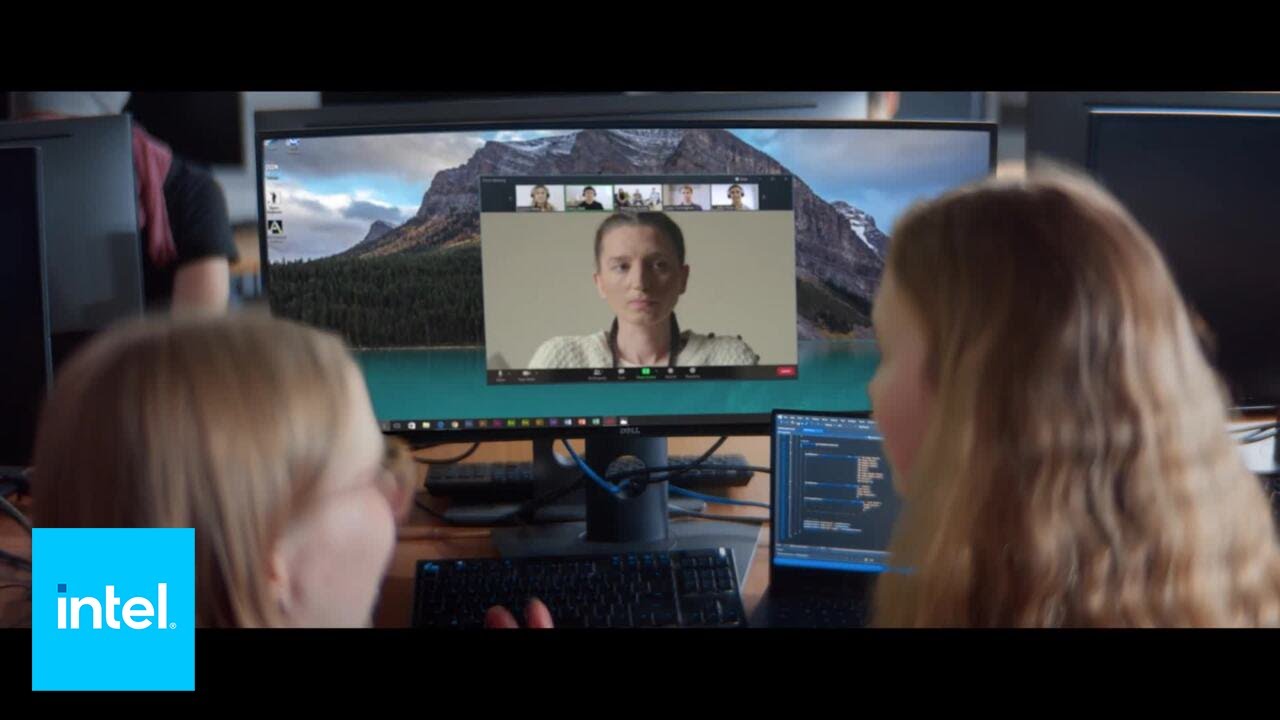

Created in collaboration with technology leaders such as Microsoft and Intel, MotionInput lets users control digital interactions without a controller or mouse. Instead, they use movements, speech and expressions in combinations that suit each user’s and player’s needs. The collection and categorisation of user movements is extensive. The team worked with dozens of organisations, including the International Alliance of ALS/MND Associations in the USA, the National Autistic Society in the UK and SpecialEffect, a UK charity that supports gamers with disabilities. This work ensured the technology was designed from the ground up to address a wide range of needs and to include everyone.

Many charitable groups are working together to reshape modern gaming so that it is available to all. A key aim of the MotionInput technology is to drive re-releases of existing creative titles in the gaming economy, so that a new generation can experience games through movement like never before. It also aims to uphold the equitable computing principle of leaving no one behind in terms of accessibility.

Where MotionInput is being used

MotionInput’s technology is already finding its place in a range of educational and community settings. For instance, UCL teamed up with Code Ninjas, a coding club for children in North London, to introduce young learners to accessible game design. Through this programme, children use Scratch and Microsoft MakeCode to make games for other children that are accessible by design. These child-led game projects use MotionInput head gestures to play games such as whack-a-mole and turn-based 2D games. In more advanced tutorials, children can build a block-busting game like Tetris or Candy Crush controlled by wrist movements. They can also turn it into an exercise game by using their elbows to move the blocks around and their knees to drop or click them. This “hands-on” experience has taught children valuable digital skills in equitable computing design for games, while promoting empathy and an understanding of how to make technology inclusive.
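To make the exercise-game idea concrete, the sketch below shows one way recognised body gestures could be translated into the key presses a block game already understands, so the game logic itself does not change. It assumes a hypothetical gesture recogniser that emits text labels such as `"left_elbow_raise"`. These labels, the `GESTURE_TO_KEY` table and the `translate` function are illustrative only and are not part of the MotionInput Engine APIs.

```python
from typing import Dict, Iterable, List

# Hypothetical gesture labels mapped to the keys a Tetris-style game expects.
GESTURE_TO_KEY: Dict[str, str] = {
    "left_elbow_raise": "ArrowLeft",    # move the block left
    "right_elbow_raise": "ArrowRight",  # move the block right
    "wrist_rotate": "ArrowUp",          # rotate the block
    "knee_lift": "Space",               # drop the block
}


def translate(gestures: Iterable[str],
              mapping: Dict[str, str] = GESTURE_TO_KEY) -> List[str]:
    """Convert a stream of gesture labels into key presses, skipping unmapped gestures."""
    return [mapping[g] for g in gestures if g in mapping]


if __name__ == "__main__":
    print(translate(["left_elbow_raise", "nod", "knee_lift"]))
```

The mapping lives in a plain table, so swapping elbows for wrist movements or head tilts only means editing that table. This is the kind of per-player adjustment the accessibility goals above call for.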

The National Autistic Society (NAS)’s Sybil Elgar School has also incorporated MotionInput tools into its curriculum to support students with autism in learning life skills. The technology creates an immersive, controlled environment where students can practise daily routines and build confidence in a way that feels safe and engaging. A first technical demonstration game, Superhero Sportsday, was developed in Unity using MotionInput technology. In four months it was deployed as a series of sporting challenges for the autistic children at Sybil Elgar School. Windsurfing and hang gliding were by far the most popular activities. Neither required VR headsets or expensive, complicated equipment, and both were simple enough for teachers to let the children start playing. The success of these early demonstrations suggests that MotionInput can be a powerful tool for creating inclusive learning spaces and helping learners thrive. Several new game titles are under development with the NAS, Intel and UCL Computer Science, in collaboration with MotionInput Games.

Beyond Gaming: Expanding MotionInput’s impact

Although MotionInput technology was originally designed with gaming in mind, its potential extends far beyond entertainment. Similar technologies are being developed to bring games into healthcare, allowing patients to use gestures in interactive therapy sessions such as breathing exercises or physiotherapy stretches. In education, MotionInput offers students with diverse abilities new ways to engage with lessons that feel natural to them.

Looking Forward: A more inclusive future for gaming, storytelling, character design and beyond

MotionInput Games is leading the way toward a future in which new and existing digital content can be consumed through many modalities. By transforming how people interact with games and software in general, MotionInput technology is helping to create equitable computing environments where everyone, regardless of ability, can be part of the storytelling of the digital worlds they use.

Touchless gaming design may soon become a standard part of the games development process. It enables inclusive digital interaction and engaging new IP that explores ways to both mimic and generate new creative interactions with the characters and stories we love.

Vendors planning re-releases of existing games should consider accessibility updates. For titles with strong IP and character motions that everyone finds fun to imitate, MotionInput Games can help vendors create whole new experiences with minimal development time.

By working with games companies, MotionInput can get children moving, keep older people active and let those with accessibility needs share in the gaming experience like never before.

Further Web Resources:

https://www.intel.com/content/www/us/en/newsroom/news/interact-computer-without-touching.html